Most developers judge a system design by one question: does it keep latency constant as throughput increases? This definition is correct, but it directs most of the attention to scaling (horizontal scaling in particular), and the solutions on offer all serve that concern. What is usually left out is consistency and concurrency. Many developers forget that every piece of software carries a serious problem that lurks like a hidden danger and can undermine both that constant latency and throughput. The name of the problem? Concurrency.

The catch is that all the solutions proposed for this problem cancel out all, or at least a large part, of the measures taken to scale and increase throughput.

But...

Wait

Is this really the case?

In this article, we provide a practical, step-by-step guide to making software systems scalable, from a single-server setup to a fully distributed architecture.

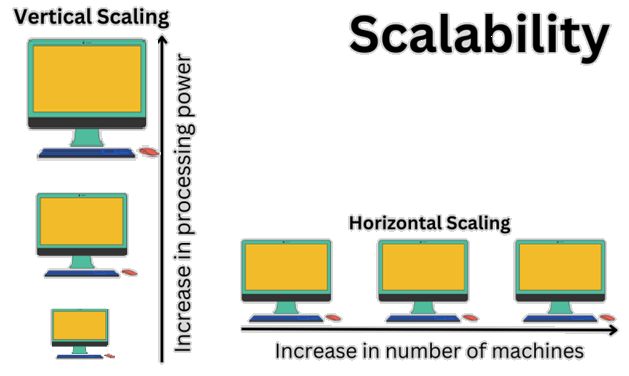

It begins with the fundamentals of I/O performance, explaining how hardware limitations such as disk speed and network latency affect system throughput. Then it explores progressive scaling strategies, including vertical and horizontal scaling, caching, asynchronous processing, and load balancing.

Finally, it addresses the deeper challenges of distributed systems: data consistency, the CAP theorem, replication, sharding, and event-driven communication, offering clear, real-world approaches for designing resilient, high-performance applications.